Introduction

A new chapter of power is opened — in orbit

On a quiet March afternoon, our corner of the internet lit up: Relativity Space — the 3D-printing launch upstart that had raised $1.3 billion and sent one rocket to space — had been acquired. The buyer wasn’t a rival rocket manufacturer, nor a defense prime, but an individual — one of the architects of the modern (commercial) internet.

The purchaser and Relativity’s new chief executive was Eric Schmidt, the former Google CEO whose net worth hovers around $23 billion — and whose post-Alphabet résumé reads like a classified annex on AI, geopolitics, and national security modernization.

At first glance, his acquisition of Relativity seemed strange. Why rockets? Why now? Was it a defense play? Schmidt has spent the past decade underwriting talent pipelines in artificial intelligence, bankrolling software and semiconductor startups, and advising the Pentagon on digital transformation. All of that is to say — rockets seemed orthogonal.

The pieces didn’t add up, until Ars Technica’s Eric Berger put the puzzle together: Schmidt had allegedly bought the aspiring launcher to help us prepare for the supposed next wave of advanced computing…

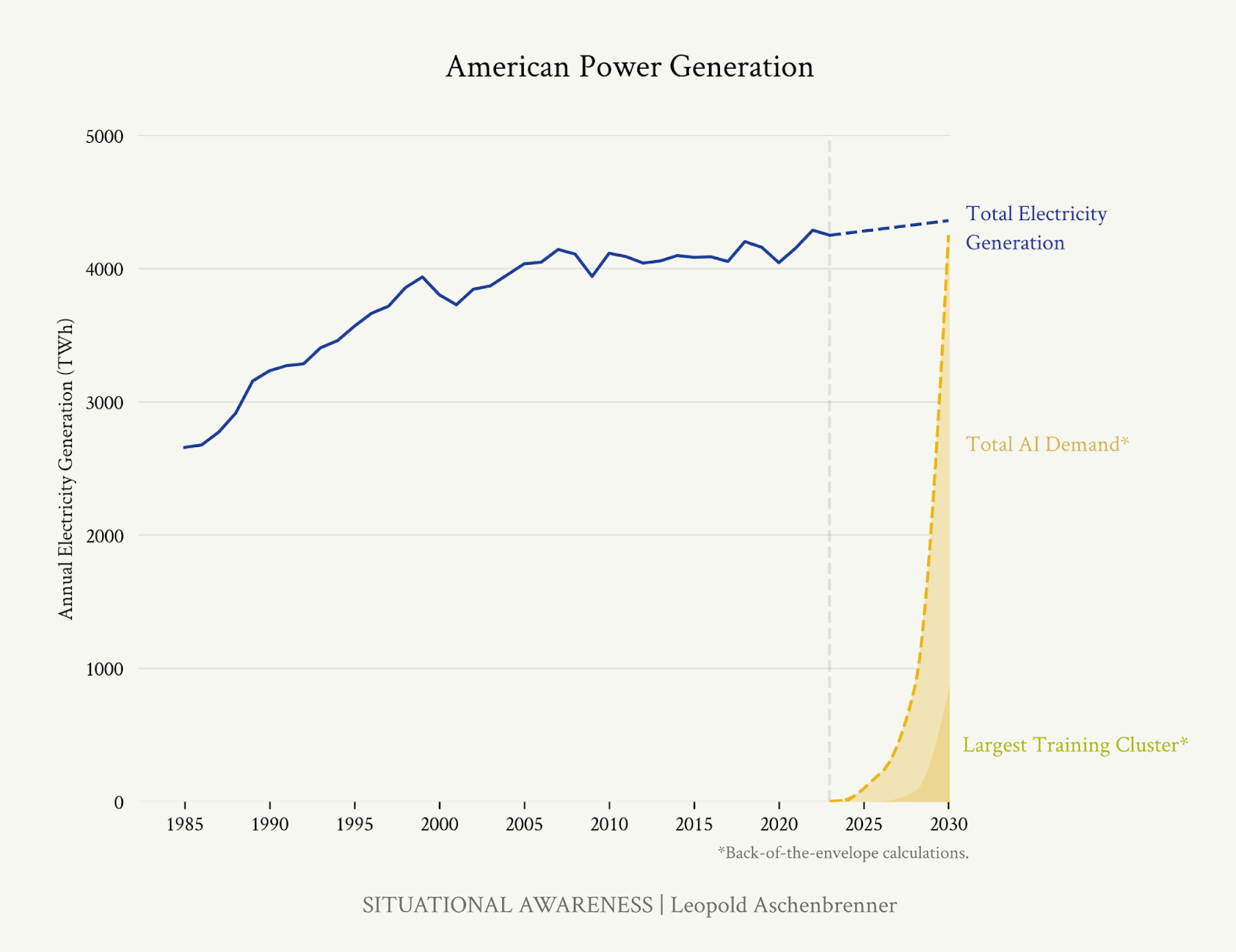

Some months prior, testifying before Congress, Schmidt had warned that AI demand could balloon data-center power from ~3% today to nearly 10% of US generation by 2030 — requiring an extra 67 GW, equivalent to 60 nuclear units. Berger tweeted his inference: Schmidt bought a heavy-lift launcher so he can loft the next cloud frontier into orbit.

Schmidt’s one-word reply — “Yes.” — was confirmation enough.

That throwaway reply pulled a thread that has been running through Pentagon whitepapers and Sand Hill pitch decks alike: the most coveted site for next-generation, megawatt-scale data centers isn’t another desert data hall or coastal megagrid — it’s space.

Stratagic planners imagine on-orbit analytics that shave decision chains from minutes to seconds, while hyperscalers picture GPU farms bathed in perpetual daylight. All the while, concerns mount over the prospect of space-based offensive weapons deployed by hostile adversaries, raising the stakes for orbital infrastructure security.

China just upped the ante — how real is it?

Last Wednesday, a Long March 2D rocket lifted the first 12 ADA Space Star Compute craft into low Earth orbit. ADA Space, a Chinese startup, and Beijing have marketed this as the opening volley in a planned 2,800-satellite “Three-Body Computing Constellation.” The 3-Body fleet will supposedly deliver an eye-popping 1,000 POPS (PetaOperations Per Second) of distributed horsepower by decade’s end.

Each spacecraft totes an 8 billion-parameter model, 744 TOPS of compute, and 100 Gbps optical cross-links. In aggregate, the debut flock supposedly can deliver ≈5 POPS on orbit. This is headline material, but crucial engineering facts remain under wraps, including thermal and radiation margins, storage sufficiency (30 TB for the whole flock), and whether the network can maintain tight node-to-node coherency. Until independent telemetry surfaces or additional tranches launch, the project sits in a quantum state — part breakthrough, part potential mirage.

Whether Beijing’s Three-Body Computing Constellation pans out is almost beside the point — space is now on the short list of places serious people look for the next jump in compute capacity.

Since orbital compute has moved from slideware to flight hardware, it’s impossible to ignore as a live strategic vector. We must examine the idea on its physical merits, not just its geopolitical sizzle.

Before we tally the long list of devilish constraints, let’s consider why orbit keeps resurfacing in board rooms and Congressional testimonies whenever the talk turns to gigawatt-class AI.

Section 001

Why is this so captivating?

- Abundant solar energy: In orbit, solar panels receive sunlight far more consistently than on Earth (no weather or atmosphere, and only brief orbital night periods at some orbits). This can yield many times more energy per panel than ground installations (though not 24/7, as is sometimes claimed).

- Efficient heat rejection: A spacecraft can radiate heat directly to cold space. Unlike terrestrial servers that need costly chilled water or A/C, an orbital data center dumps waste heat via radiators. Space offers a vast heat sink — in principle, allowing high-density computing without overheating.

- No land footprint: Orbiting servers don’t consume real estate. Thousands of satellites could operate without the land use conflicts or local environmental impact concerns plaguing giant Earth-based server farms.

- Global coverage: An orbital platform can service users over a broad area (or even the entire globe, as a constellation). Sensitive data or computation can be kept physically distant from Earth, which is enticing for secure data storage use cases and tamper-resistant processing.

So, in theory, a constellation of satellites or a large orbital platform could provide massive computing power unbounded by Earthly constraints.

It’s an exciting vision — but can it operate across the vast distances required, given the signal latencies that may lead to excessive power dissipation or unacceptably poor performance?

Realizing this version is anything but straightforward

The same space environment that offers abundant sunshine and a cold vacuum also imposes harsh physical and engineering constraints that terrestrial data centers never encounter: grappling with orbital mechanics; solar eclipses every 90 minutes (in many orbits); damaging radiation; continual degradation — and eventual destruction — from a constant barrage of space debris impacting the large surface areas required; extreme thermal swings; and the unforgiving economics of launch and space operations.

Then again, if we can overcome the challenges, space-based compute might one day provide clean, too-cheap-to-meter power and cooling for intensive high-performance computing and AI workloads.

This is a very big “if.” Before we get too carried away, it’s crucial to take a hard look at why this problem remains unsolved and what constraints must be conquered to make orbital computing practical.

Here’s the flight plan for the this antimemo:

- Define the viable workloads: Pairing orbital advantages with missions that could be economically viable–security, deep space science, remote operations, autonomous fleets, and long-horizon AI compute.

- Quantify the gatekeepers: Orbital mechanics, power duty cycles, heat rejection, space radiation doses—both naturally occurring and from man-made space weapons—link budgets, deployable mass, and more—we analyze each constraint based on current technology rather than aspirational cappabilities.

- Build the timelines: We map these constraints onto three development phases: the near-term “Crawl” phase (sub-10 kW demonstrators), the medium-term “Walk” (tens to as much as 100s of kW clustered nodes), and the long-term “Run” phase (hundreds of kW to megawatt-scale platforms requiring on-orbit assembly or giant constellations of smaller satellites).

- Call the shots: Finally, we translate physics into strategic guidance: identifying critical technology development areas, balancing visionary thinking with engineering realities, and providing perspective on the incremental path toward scaled spaced-based computing infrastructure.

To evaluate orbital computing as a viable strategy, we must think from first principles and seriously consider the trade-offs. Let’s dive in.

Section 002

Where orbital compute could actually win — and why is matters now.

It doesn’t make sense to put every workload in space. The idea of running servers above the Kármán line only survives if orbit delivers advantages that Earth physically cannot. Space is a physics-constrained environment whose pay-offs appear only when the mission profile outright breaks Earth-bound assumptions. Below is the unvarnished menu of use cases that clear that bar — or could clear it in near-future design cycles.

#1: A security edge measured in seconds

Modern Earth observation birds generate tons of data, while a fleet of commercial satellite imagers can churn out terabytes of raw pixels and sensor data every day. Trying to downlink all that raw data — which costs bandwidth — is expensive and self-defeating. And we know three things:

- U.S. government, commercial, and civil space SATCOM (satellite communications) pipes are already clogged.

- The data output of our remote sensing satellites has eclipsed our ability to send this data to Earth in a timely, usable manner.

- And yet, AI is in its golden age. Classification, identification, and detection algorithms continue to set new benchmarks and prove themselves worthy in high-stakes situations.

So, given these three things, a clear question emerges: why not move the analysis next to the sensor? If you embed a GPU-class processor into your satellite, you could use this AI accelerator, co-located with your sensor, to algorithmically identify “needles in the haystack.” With dedicated GPU racks upstairs, commanders can down-link the “needles” (AKA threat vectors, such as troop movements or missile launches) rather than the whole haystack. This buys precious response time and unclogs congested SATCOM pipes.

This is not a new concept nor hypothetical idea. Pathfinder missions from the U.S. Space Force and the Space Development Agency have already flown GPU-class “edge” computers alongside missile-warning payloads. The goal: use onboard processors to prove that a satellite itself can identify hypersonic threats. Plus, a wave of well-funded, promising, and hungry new startups are also angling to serve this market.

Beyond imagery…there’s plenty of chatter and buzz around companies and governments using space to store sensitive information, such as cryptographic keys or AI model weights. A 5-ton high-security vault circling 500 km overhead could function as a tamper-proof enclave. (Though, if we are being sticklers, unless this node is running algorithm workflows on the regular, you could more accurately call this “on-orbit memory” rather than compute.)

- Radiation‑protected commercial chips would be housed in an aluminum enclosure, communicating solely through secure optical links.

- Any unauthorized approach or tampering would trigger data-wiping protocols before interception is possible.

- Launching a three- to five-ton, ≈100 kW vault is not cheap, but at Starship‑class prices, it is trivially small compared to terrestrial alternatives.

Why did we list this #1? Time-sensitive security customers will likely pioneer orbital computing for one simple reason: organizations willingly pay premium prices for unique strategic advantages. If on-orbit processing can compress decision cycles from minutes to seconds, the competitive edge gained can justify the entire cost. All of the sudden, you have an early adopter helping to underwrite the development that eventually enables broader commercial applications.

#2: Build an orbital cloud for our telescopes, rovers, landers, and swarms

Space science has an odd problem: the farther we fly, the sharper our instruments get—but our data pipes stay stubbornly narrow. The James Webb Space Telescope (JWST) is the poster child. Its infrared cameras can easily collect ≈1 terabyte of raw data every day, yet the Ka-band link back to Earth can move only a fraction of that in real time. Mission planners and scientists must triage ruthlessly, discarding frames and compressing the rest, because they have no choice.

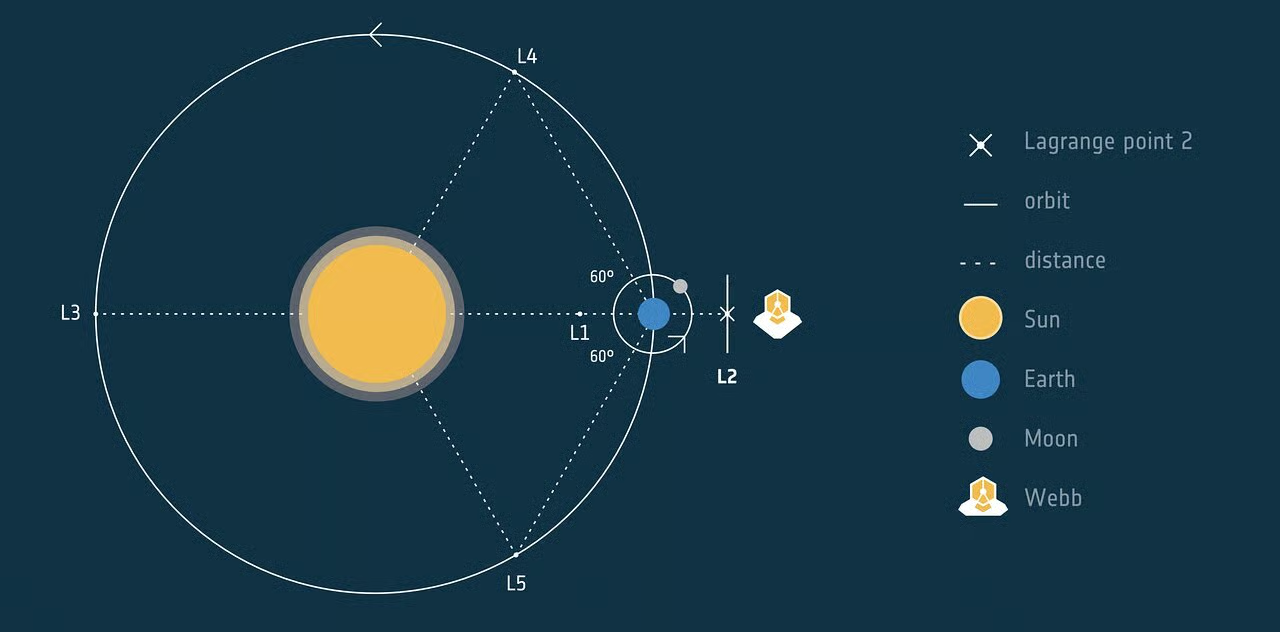

So, why not park a shared compute node near the telescope? We’re imagining a modest, school-bus-size server farm stationed at the Earth–Moon L1 point—always in sight of both JWST and mission control. You probably know where we’re going with this:

- We park our server farm at L1. From this perch, the hub maintains continuous line-of-sight to JWST, future large UV-optical telescopes, orbiters, and rovers.

- Instruments uplink their unfiltered sensor feeds a few hundred thousand kilometers to the node, which handles calibration, cosmic-ray scrubbing, and first-order science pipelines in situ.

- The node runs the same cross-correlation and de-noising algorithms we use here on Earth — then relays home only the high-value products.

(This is not a new idea! It looks a lot like how large radio telescope arrays on Earth use local supercomputers to correlate signals in real time.)

Why now? This all becomes feasible in the not-so-distant future because Moore’s Law is finally following us beyond low Earth orbit. For decades, space-qualified processors have lagged significantly behind commercial chips. That gap is slowly closing.

- NASA’s HPSC processor, promises ~100X performance gains over current space CPUs.

- Due to fly later this decade, the chip boasts something close to a modern laptop’s horsepower, while surviving the radiation and temperature swings of deep space.

- With HPSC-class silicon, the same ML models that classify galaxies in a data-center rack in Virginia can run inside a sun-shielded appliance 300,000 km away.

And we don’t need to limit ourselves to deep space. If we put an orbital computing hub in Earth orbit, we can support many missions at once — akin to a cloud server that rovers, probes, or space station experiments call upon for heavy calculations.

Using a combination of L1 and Earth-orbit nodes, and potentially hubs at other strategically critical points, future missions would be able to tap the same pool instead of schlepping a supercomputer — including rovers, orbiters, planetary drones, and future swarm missions.

- A Mars rover might beam a huge dataset to an Earth-orbiting node for analysis. The data center would then return summarized insights to the rover. (Given the multi-minute light-speed delay between planets, this approach would be suitable for non-time-sensitive processing tasks.)

- Future deep space “swarm” missions—dozens of palm-size probes sampling a comet tail or, say, studying the rings of Saturn—could dump a feed of raw data to the L1 hub, receive distilled science minutes later, and adjust flight paths autonomously.

The dividends of this model compound.

- More science per watt—sensors no longer idle for bandwidth.

- Tighter feedback loops—operators refine observing scripts the same day.

- Lighter spacecraft—smaller CPUs, batteries, and radiation vaults.

- Network effects—each new mission offsets the hub’s fixed cost, making orbital compute an infrastructure layer, not a one-off experiment.

Instruments operate flat-out instead of throttling for down-link; scientists tweak targets within hours, not days; vehicles shed mass and power by shrinking on-board avionics; and each new user strengthens the economics for the next.

Move the supercomputer next door to the sensor, and the universe stops waiting for our bandwidth.

#3: Civil security & remote ops — eyes everywhere, bandwidth to spare

The same logic that applies to accelerated hypersonic warning loops discussed above can apply to natural disasters.

Wildfire crews in California, pipeline controllers in Alaska, and rig operators in the Gulf all share the same pain: their assets and operations sit where fiber and 5G never will. An orbital compute node could give these domains a dedicated, always-on, and low-latency analyst…below, some illustrative examples:

- Forests on a hair-trigger…Infrared satellite constellations are already flying and regularly sweeping fire-prone regions regularly. With an onboard model and AI accelerator, the satellite itself can spot a 3-pixel heat bloom and tag a GPS point before smoke clears the treetops. Dispatch receives a 1-sentence alert with coordinates, not full imagery, and rolls trucks while the flames are still curb-height.

- Aviation’s volcanic tripwire…When Anak Krakatau belches ash, the first plume at 10 km altitude jeopardizes trans-Pacific routes. A spectrometer-equipped smallsat flags the SO₂ spike, estimates plume height, and pushes a notice to flight planners in the same update cycle that weather radars publish.

- Pipelines without patrol choppers. Long-wave hyperspectral imagers measure methane leakage signatures every orbit. Rather than charter a $10K-per-hour helicopter with an IR gun, operators get a daily line map pinpointing vent sites to within a football field.

- Arctic logistics gone digital. Research camps, rare-earth mines, and ice-breakers north of 70° N can piggyback on the same network. Ice-floe drift, incoming blizzard fronts, and diesel-tank levels are calculated aboard a high-inclination compute sat, then forwarded as a low-bandwidth situational brief that HF radio links can swallow.

The common win isn’t abstract “edge AI”—it’s actionable intelligence delivered to people and machines that have lived for too long in a data desert.

With orbital data-crunching birds on watch, the backcountry finally talks back.

#4: Autonomy at global range

Robots and autonomous vehicles thrive on data—but unless they are end-to-end, AI-capable, they trip up the moment they leave good backhaul. A speculative use case for space-based compute is to serve as an overhead edge cloud for drones, self-driving vehicles, and remote installations to connect to for heavy computation.

It’s important to note, though, that this concept faces steep challenges right out of the gates. The latency of satellite communication, even in LEO, is on the order of tens of milliseconds at best. This is too slow for split-second decisions. No one wants a self-driving car waiting half a second for a satellite to tell it if a traffic light is red. Plus, bandwidth is too limited for hundreds of vehicles to simultaneously offload high-definition sensor feeds.

An orbital compute layer, then, could fix the learning bottleneck rather than the steering loop. Some scenarios to help us paint the picture:

- Mining fleets, minus the depot fiber. Each haul truck at a Pilbara lithium site records ~30 GB of LiDAR and stereo video per shift. A mast-mounted optical terminal pushes the bundle to a low-inclination compute sat during a five-minute dusk pass. The node fuses every trace into a new centimeter-grade base-map and beams back a 150 MB delta before the morning pre-start—no trenching required.

- Uncrewed ships that learn between watches. A cargo drone mid-Pacific ships 5 GB of radar clips and AIS anomalies on its sunset contact. The satellite replays near-miss scenarios, tunes the detection network, and returns updated model weights (≈50 MB) on the pre-dawn pass. Helm reflexes stay local; overnight training keeps the AI sharp.

- Pop-up drone swarms that self-calibrate. A survey crew releases forty quadcopters into canyon country. The birds off-load raw point clouds to a line-of-sight truck antenna, which relays ~20 GB skyward. The compute node stitches a master mesh and pushes optimized waypoints—under 30 MB total—before battery swap #2.

To be clear, these are highly speculative use cases (and squarely in the realm of sci-fi). But it’s a very real possibility. Companies like Tesla–using the Starlink network—have mused about leveraging space infrastructure to update and coordinate fleets worldwide.

Our rule of thumb for this world: keep reflexes local and ship the homework sky-ward. With a few gigabits of high-rate uplink per day, robots that roam far from fiber can still graduate each night a little smarter than the night before.

#5: Silicon in the sunshine — the lure (and limits) of space AI factories

The most ambitious vision—and the one generating the hype—is to run large-scale commercial workloads in orbit. Those “AI factories” that Jensen Huang has been talking about a lot lately? This state-of-the-art infrastructure is what we’d eventually ship to space, according to this vision.

It’s the very same vision lighting up decks from Palo Alto to the Pentagon—and it’s why former Google CEO Eric Schmidt now owns a rocket company.

The promise sounds simple enough: today’s frontier AI centers gulp tens—sometimes hundreds—of megawatts and still sweat to stay cool.

Move the racks upstairs and the equation flips. Above the atmosphere, triple-junction solar arrays soak up unfiltered sunlight, while the surrounding 3 K vacuum is an ever-open radiator. Endless power plus effortless heat rejection means your orbital “AI factories” crunch models around the clock, free of grid bottlenecks or water-chiller headaches.

In practice, however, this dream faces the full gauntlet of challenges we’ll discuss in the next section.

Power, cooling, radiation, maintenance, and communication all become enormous hurdles when scaling up to data center-class workloads in orbit.

A single heavy-lift launch might loft a few tons of equipment—perhaps enough for a modest computing cluster (on the order of a few kilowatts of IT load). But a modern terrestrial data center is measured in tens of megawatts (for example, ~1 MW can power a small data hall, 50 MW+ for a large facility). To approach that scale in orbit would require multiple orders of magnitude more mass and, most likely, on-orbit assembly of large structures. This quickly becomes a multi-$B mega-project rather than a lean startup.

We will need to walk before we run. A true space hyperscale remains an ambition measured in decades, not product cycles.

Keeping that skepticism handy, and with this context in mind, let’s now examine the core engineering challenges that will determine the feasibility of orbital computing.

While the vision of space-based computing is compelling, successfully navigating these hurdles will ultimately separate viable projects from speculative ventures.

Section 003

Engineering challenges and constraints in orbit

A space-based computing system isn’t simply an “offshore” data center; it’s a completely different beast where nearly every assumption of terrestrial IT is inverted. These engineering challenges explain why, despite compelling theoretical advantages, no one has yet built a cloud in space at scale.

In this section, we’ll examine the following critical constraints:

- Orbital mechanics: How altitude choice fundamentally determines latency, coverage, radiation exposure, and operational lifespan

- Power generation and storage: Managing solar collection, eclipse periods, and energy storage in the harsh space environment

- Thermal management: Radiating waste heat in vacuum without the luxury of air or water cooling

- Radiation effects: Protecting sensitive electronics from cosmic rays and solar events that damage or disrupt computing hardware

- Structural considerations: Assembling, deploying, and maintaining large-scale infrastructure in microgravity

- Communications: Overcoming bandwidth limitations and atmospheric interference when connecting to Earth

- Debris and safety: Mitigating collision risks and ensuring responsible end-of-life disposal

Solving these problems is not impossible, but each requires cutting-edge engineering. Often, solving one problem makes another harder. It’s a delicate balancing act under harsh constraints, creating a multidimensional optimization problem that demands honest assessment of trade-offs.

Let’s begin with the fundamental decision that shapes all others: where to position your orbital data center.

Orbital mechanics: address sets the mortgage

Okay, so you say you want to build a space supercomputer. Great, what next?

One of your first decisions: pick an orbit. That choice hard-codes latency, lighting, radiation, drag, and—by extension—your capex and opex. You’re trading one pain point for another, so pick the pain you can underwrite.

Geostationary Orbit (35,786 km altitude): A satellite in GEO appears fixed over one spot on Earth’s equator, which is great for continuous coverage of a region. GEO offers essentially full-time sunlight (except brief eclipses around the equinoxes) and zero aerodynamic drag (as it’s above the atmosphere completely). This means no orbital decay and no need for reboost rockets, and only minimal batteries to cover the short eclipse seasons.

The huge PITA is latency. Since GEO is ~36,000 km away, one-way signal time is ~120 milliseconds. A round-trip (ground up to GEO and back down) is roughly 240 ms just in light travel time, which is nearly half a second when accounting for networking overhead. Latency is therefore physics-capped: ~120 ms one-way, ~240 ms round-trip before switching delays.

With GEO, the user experiences a noticeable lag (imagine trying to game with half-second ping times!). GEO might work when bandwidth beats time — batch processing, bulk analytics, and delay-tolerant tasks — but it’s a D.O.A. for latency-sensitive workloads. Additionally, GEO slots are heavily regulated and crowded with satellites — any large new platform would require a fair bit of international coordination to avoid radio interference.

GEO satellites are typically moved to a higher “graveyard orbit” at end of life, since bringing a dead bird down from GEO is impractical. Repair is effectively impossible, and if something fails in GEO, it’s stuck up there forever. A defunct compute platform in GEO could become a piece of very long-lived space junk.

Lastly, one more thing: launch to GEO is ~3× LEO pricing.

Low Earth Orbit (300–1000 km altitude): LEO is attractive for its lower latency and ease of access. At a few hundred kilometers up, a satellite has a one-way signal time on the order of only 1–4 milliseconds. Even including processing and network hops, it’s feasible to get <50 ms round-trip latency from an orbital server to a ground user—comparable to terrestrial long-distance internet links. This is why constellations like SpaceX’s Starlink (at ~550 km) and OneWeb (at ~1200 km) aim to provide broadband with fiber-like latencies.

Launching to LEO is much cheaper and easier than reaching higher orbits. And we can get a lot of payload to LEO with current rockets (along with returning and/or servicing spacecraft, in some cases).

If a LEO orbital data center fails or it’s retired, it can be deorbited with extreme care and quite safely, preventing long-term debris buildup.

- Below ~600 km altitude, orbital decay is a built-in “cleanup mechanism” and atmospheric drag will gradually pull the satellite down over the course of years.

- At ~500 km, an object might stay in orbit for a decade or two before reentry.

- At 300 km, it would reenter in a matter of weeks to months.

(In all deorbit cases, extreme care and orbital decay planning must be taken for large, heavy objects, due to the potential damage to humans and infrastructure from out-of-control heavy remnants not guided into a planned safe landing spot.)

This is a really big deal that can’t be ignored. I’ve deorbited giant objects, and with all the planning, it was sweaty palms until safe reentry was validated—even though we had precision and detailed planning. Dan Goldin

Large data centers will have a very high thermal mass that might not fully burn up upon reentry. Should this occur, it would be nice if we ensured that the data center falls in a place where there are no people or infrastructure. But this thermal mass issue also means any LEO data center needs regular propulsive boosts, or an acceptance that it has a limited lifespan.

LEO’s downsides = coverage and continuity. A single LEO satellite zips around the Earth in ~90 minutes and is over a given ground spot for only a few minutes each pass. So, one compute satellite would not be continuously accessible unless you buffer tasks and send results later. For real-time service, you’d need a constellation of many satellites networked together to ensure that at least one is always in view of the user or ground station. (That said, careful analysis must be undertaken in designing the system to ensure there aren’t long paths for signals to travel, causing distortions and unacceptable latencies and associated power dissipation).

This design drastically increases complexity—now you’re basically building a distributed cloud across dozens of moving nodes, plus adding inter-satellite links or a handoff mechanism for data. It’s not the easiest of tasks, but it is doable. (Starlink’s internet service uses thousands of satellites to provide near-continuous coverage). Nonetheless, for a data center application, these requirements mean much higher cost, design complexity, and coordination.

Another big issue for LEO is orbital lighting and eclipses.

- Unlike GEO, a low orbit satellite spends a significant fraction of each orbit in Earth’s shadow (night). For a typical orbit around a few hundred km altitude, roughly 25–35% of each 90-minute orbit is darkness (the exact figure depends on the orbit inclination and season). This means we have on the order of 30 minutes of eclipse time, every orbit.

- Sun-synchronous orbits (popular for Earth observation satellites at 500-800 km) are often said to have “continuous sunlight,” but this is a bit of a misconception. A dawn-dusk sun-synchronous orbit (where the satellite tracks along the day-night terminator) can maximize solar exposure, eliminating nighttime at certain times of year. Still, even these orbits still experience some eclipse periods (especially around equinox).

- In practice, no low-Earth orbit enjoys 24/7 sunlight year-round. 70-75% illumination is more typical.

The implication: any LEO data center must be equipped with serious energy storage (batteries) to run through the eclipse portion of each orbit — or you must scale back operations during every night period. This is a non-trivial design factor that simply doesn’t exist for terrestrial data centers.

And, one more LEO headache worth noting: it’s within the denser debris environment. Thousands of active and defunct satellites, rocket bodies, and bits of junk are lurking up there, particularly in the 400–800 km band Collision risk must be actively managed, and satellites often need propulsion to dodge conjunctions. A large structure in LEO could become a debris magnet (which we will return to later on).

Medium Earth Orbit (MEO) and Highly Elliptical Orbits (HEO): We have intermediate options between LEO and GEO. GPS satellites orbit in MEO at ~20,200 km. A MEO orbital data center would have latency in the 10s to low-100s of milliseconds (perhaps 50–150 ms depending on altitude). Beats GEO, but still worse than LEO. MEO would see longer sunlight periods and shorter eclipses than LEO, easing power storage needs. Plus, fewer satellites would be needed for continuous coverage, since each has a wider view of Earth. But launch is costlier and radiation is more severe. Many, but not all, MEO satellite trajectories pass through the Van Allen radiation belts (depending on orbit geometry and altitude selected).

We can also consider Highly Elliptical Orbits (HEO), like Molniya or Tundra orbits, which have long dwell times over certain regions. These could provide quasi-continuous coverage to high latitudes (where GEO signals are weak) and can be configured to have continuous sunlight during critical parts of the orbit.

HEO orbits might offer niche advantages for regional orbital computing (for example, covering far northern territories with near-constant visibility), but they introduce complexities in orbital dynamics and uneven communication latency as the satellite speeds up and slows down.

VLEO: Very low altitudes (below ~300–400 km) would minimize latency and launch cost further, but this low, the atmospheric drag becomes so strong that a satellite would deorbit within months or even weeks without continuous propulsion.

(Some Earth observation sats do operate in very low Earth orbit around 250–300 km and historically lower, but today they rely on electric thrusters, have aerodynamic form factors, and are constantly fighting air drag. This is feasible for a small imaging sat but unlikely to be practical for a massive data center platform.)

In sum…Orbit is the ultimate trade-space. The high ground offers a life that is blissfully drag-free with stable operation and nearly continuous sunlight, but it’s gonna cost you: every packet carries a quarter-second delay, launch costs you 3X, and it’s difficult if not downright impossible to service your data center if anything goes wrong.

Current efforts favor LEO (500–600 km) as the sweet spot: low latency, moderate launch cost, manageable ~30% eclipse duty cycle, and decently long orbital life (years to decades) with some reboosts.

- A recent project proposed to position its system at around 600–800 km with the specific goal of shortening eclipse periods while still staying low enough for latency and eventual deorbit.

- This is a clear example of orbit trade-off thinking.

So, which altitude wins? It all depends on the workload. If your customer chafes at 100 ms delays, LEO is non-negotiable and you swallow the eclipse tax. If uninterrupted power tops the checklist, you climb until the shadow appears and stomach the lag.

Batteries, shielding, propulsion, thermal margins—each knob you twist solves one headache and inflames another. There is no universally “best” orbit, only the least-bad compromise that lets your mission pencil out.

Power generation, storage, and intermittency…How do you keep the lights on up there?

Power is the lifeblood of any compute platform. In space, this means your data center is solar-powered, since large-scale fusion systems have not yet been qualified for safety and cost effectiveness.

“Free solar” is a sales slogan in space, but it is not a business model. Yes, sunlight hits an orbital panel with ~30% more punch than it does in Phoenix and you bask in the rays almost year-round in GEO. But in LEO—our latency sweet-spot—every 90-minute lap includes a 25-35% blackout.

It’s true that an orbital solar array can yield significantly more energy per panel than the same area on the ground, which might only see, say, 20-25% of the sun over a day on average (after nights, weather, and cosine losses).

In some cases, an orbital panel might collect 5-10× the annual energy of a typical Earth-mounted panel of equal size. But it is definitely not unlimited nor 24/7 without interruption, especially in LEO. And it’s not cheap to get that solar panel to orbit!

LEO rule of thumb: Your data center’s power system design must account for eclipse downtime. In a LEO scenario with ~30% eclipse each orbit, if your computers require 10 kW of continuous power, you have to generate enough surplus energy during the sunlit 60 minutes to run the 10 kW and charge batteries for the 30-minute dark period.

That typically means oversizing the solar array beyond the simple average load. Furthermore, you need a battery system (or other energy storage system) that can carry the full load for that ~30-min eclipse (or whatever duration, based on orbit).

- The ISS, for example, uses large batteries to power life support and systems through its ~35-minute night each orbit.

- For a data center, if you wanted uninterrupted computing, you’d plan similarly: size the batteries to cover the peak power draw for the entire eclipse. (But, do note that this adds considerable mass and complexity).

High-power, space-rated batteries (typically lithium-ion) have decent energy density but are quite heavy and require delicate treatment (as thermal runaway in a satellite is a nightmare). You may alternatively accept intermittent operation — for example, perhaps certain batch jobs could pause during eclipse if that’s tolerable. But many computing tasks expect steady, 24/7 availability, so let’s assume we design for continuous power.

Some quick back-of-the-napkin math: Let’s suppose we’re planning for an orbital data node that needs ~100 kW of electrical power for its systems. This is a very modest data center by Earth standards, but it’s a good starting point.

- If it’s in LEO with ~70% sunlight, then to get 100 kW average, the solar arrays in sunlight must generate more like 140 kW to supply immediate needs and charge the batteries.

- Modern space-grade solar panels (multi-junction gallium arsenide cells) can achieve around 30% efficiency and perhaps ~200 W of power per square meter in full sun.

- So 140 kW requires on the order of 700 m² of solar panels (roughly a square ~26 m on a side, if in one plane — but likely distributed on multiple arrays).

- The mass of that array, including deployment structure, might be on the order of a few kg/m². Highly optimized arrays can achieve ~150 W/kg (~6.7 kg/kW).

- At 140 kW, that’s ~930 kg of solar panels.

- Next, the battery: to supply 100 kW for 30 minutes (50 kWh of energy) without dropping below, you’d need at least 50 kWh of storage (plus margins).

- Space-rated lithium batteries might store ~100 Wh/kg, so 50 kWh is ~500 kg of batteries (this is roughed out: newer chemistries could improve, but also add packaging overhead).

Already, we’re at ~1.4 metric tons in power hardware alone for a 100 kW system, and we haven’t included power control electronics, cabling, and the like.

If we aimed for a higher orbit with shorter eclipses, the battery requirements ease a bit (as there’s less duration to cover), but the total solar flux per orbit is similar unless you go all the way to continuous sunlight.

- GEO has no night for ~9 months of the year, but for ~6 weeks around each equinox, Earth eclipses the sun for up to ~70 minutes each day.

- That’s only ~5% downtime averaged over a year…which is much better than LEO. So, a GEO platform might only need to handle a short eclipse or possibly have a backup fuel cell for those periods.

- But nothing’s free: in GEO, recall how latency balloons, radiation cooks your cells faster, and your launch bill triples.

- Some highly inclined orbits can be arranged to have periods of constant sunlight (the ISS occasionally goes through periods of continuous daylight for several days due to orbital precession). But it’s complex and typically temporary.

Continuous power vs. eclipse cycling is a fundamental trade-off.

Bathing in perpetual sunlight isn’t all upside. If a satellite never enters Earth’s shadow, it means its radiators never get a break either. They are radiating heat while also potentially being illuminated by the sun continuously. This can actually make thermal management harder.

In LEO, the dark period of each orbit lets radiators cool off and allows sensitive components to shed heat to the 3 K background more efficiently.

So, counterintuitively, a short eclipse can act as a helpful cool-down cycle, as long as you have power stored to ride through it.

The key point is that any orbital computing design must explicitly account for power intermittency and storage. This is a challenge terrestrial data centers only face during extenuating circumstances, like a grid outage (where they use generators or UPS backup).

In space, this is a routine part of every orbit.

Fission: the Eclipse-Killer—at a Price

If you really want 24/7 juice, you would ditch the solar wings and fire up a reactor. This idea isn’t science fiction—Soviet “Topaz” units once powered radar satellites, and NASA’s Kilopower program is tooling small fission stacks for lunar outposts. A compact reactor would shrug at orbital night and pack far more watts per kilogram than photovoltaics–in theory. But every watt of fission heat needs an even bigger radiator, the reactor itself is heavy, and you inherit launch approvals, safety protocols, and planetary-protection politics.

Near-term commercial concepts will stick to solar and batteries. Nuclear makes more sense for far-flung, deep-space nodes or a future megawatt-class station where eclipses—or distance from the Sun (e.g., Mars)—leave no other options.

The Slow Fade: Why Your 100 kW Turns Into 80 kW

Not to beat a dead horse and tell you something you don’t already know — but space is unforgiving. Rdiation pits the solar cells, micrometeroroids pepper the cover glass, and panel output slides a few south every year (to the tune of 200-300 basis points or so, depending on orbit radiation levels). Batteries also degrade with cycling. So, your 100 kW starting point might recede to 80 kW after several years. Designs have to include margin or replacement plans for these components.

The bottom line: power is a major mass driver for any orbital compute project. Achieving even modest power levels (tens of kW) demands large solar arrays and heavy batteries.

Add that mass penalty to the already hefty wings and battery packs, and even a modest 10s-of-kW node looks chunky. Scale to a megawatt design, and we are talking stadium-sized structures—or we’d need a step-change like on-orbit panel manufacturing or beamed power.

No surprise, then, that early prototypes are bound within the single-digit-kW range: crawl, then walk, and then we can start thinking about cloud scale.

Thermal management in vacuum: Every joule needs a radiator

On Earth a server farm sweats and an air-handler hums—and the problem of heat dissipation is solved. In orbit, the vacuum strips you of these luxuries and becomes one of your primary design headaches.

Vacuum is both friend and foe: it’s cold out there (3 K background temperature), but the only way to dump heat is via radiation (there’s no air or water to carry heat away by convection). High-performance computing equipment generates a lot of waste heat in a small volume, so an orbital data center must effectively be covered in radiators or use clever thermal tricks to avoid overheating.

The International Space Station is a cautionary tale. Its ammonia loop and eight billboard-size radiator wings shed ~70 kW nonstop. Those wings plus plumbing weigh several tons. What would the radiator look like for a modest orbital cloud (say, 100 kW)?

The required radiator size is set by basic physics — the Stefan–Boltzmann law of blackbody radiation.

- The power radiated per area grows with the fourth power of temperature (P/A = σ T^4).

- If you can operate your radiator hotter, it emits more watts per square meter, allowing a smaller area.

- However, your electronics and coolant must run at a temperature above the radiator temperature.

- Alternatively, you need a heat pump to raise the temperature, which itself consumes power.

- Typically, spacecraft radiators run somewhere in the range of about 300–350 K (27–80 °C) for efficient heat rejection. At ~350 K (77 °C), a black surface radiates ~460 W/m² (if perfectly oriented to cold dark space).

- Real radiators aren’t 100% efficient blackbodies and don’t always have perfect view to 0 K (they may see Earth or sunlight at times), so in practice you might get on the order of a few hundred watts per square meter of radiator at best.

- A rule of thumb: roughly 0.1 m² of radiator might be needed per kW of heat to reject, if operating around 350 K. That implies ~100 m² of radiators to dump 100 kW of heat—which is indeed in the ballpark of the ISS example (70 kW with a few hundred m²).

No free lunch: Put 100 kW of compute in orbit and you must dump ~100 kW of heat. At typical spacecraft temperatures that calls for roughly 100–200 m² of radiator surface, a cooling deck that is larger than a tennis court.

Kick the workload to 1 MW and the math explodes into thousands of square metres, edging toward soccer-field real estate. All the while, every extra panel is mass to launch, drag to fight, and shiny acreage for micrometeoroids to perforate.

Orbit complicates the picture.

As mentioned, in LEO, an orbital node would glide through 30 minutes of darkness every 90 minutes. The day-night cycle is intense—radiators chill at a balmy –100°C in shadow, then the Sun slams them with +120 °C light, cycling the structure between sauna and freezer.

Go higher and the Sun never sets—great for power, but brutal for cooling because the panels lose their nightly breather. Designers juggle louvers, rotating vanes, and variable-emittance coatings just to keep temperature swings inside the survival envelope.

As discussed, a low orbit that forces a “cool-off” every 90 minutes can be helpful to bleed off heat — but it comes with the power penalty of no sunlight at that time. This interplay of thermal and power design is a unique challenge for space data centers.

Another often-forgotten consideration — don’t forget the heat trapped inside the box. Without air, cooling inside the spacecraft is harder as well.

Down here, we strap fans to motherboards and call it a day; in vacuum, a fan is just a motor wasting watts. You can’t use air convection effectively in a sealed satellite. High-power electronics in space typically sit on liquid-cooled cold plates or use heat pipes to conduct heat to the structure. While the ISS uses pumped ammonia, satellites often use loop heat pipes or two-phase cooling for components like radar or electric thrusters.

For a data center, we’d likely need a robust internal liquid cooling loop carrying heat from CPUs/GPUs out to the radiators. This means pumps, fluid, plumbing—all of which add mass and new potential points of failure. The absence of simple air cooling is one reason current space computers run at very modest power levels (tens of watts).

Scaling up means essentially building the equivalent of a building’s HVAC system into a satellite.

In short…thermal management in vacuum is a major engineering puzzle. It’s not as simple as saying “space is cold, so cooling is easy.” Space gives you the opportunity to radiate heat to 3 K, but you have to provide enough radiator area and design the thermal circuits to get the heat out in the first place.

Every watt of computing requires carefully routing a watt of heat to a radiator, which tends to enforce relatively low power densities in space systems (or else…things melt). Advanced solutions like droplet radiators — i.e., spraying a fluid and radiating heat as IR, then recollecting it — or phase-change materials have been theorized for high-power spacecraft, but these are still experimental concepts.

Any near-term orbital compute platform will likely use well-proven methods: big passive radiators, fluid loops, and maybe some clever geometry to dump heat efficiently. This is doable, but again, it means mass and size—which factor into launch and deployment costs significantly.

Radiation and reliability: Cosmic rays don’t care about uptimer

Space is a shooting gallery filled with high-energy particles of varying density and energy, depending on how far above the Earth’s protective gas atmosphere you are.

- Outside Earth’s atmosphere (and partly outside the magnetosphere, depending on orbit), electronics will be bombarded by high-energy particles: cosmic rays from deep space, energetic protons and electrons from solar flares, and the trapped radiation belts around Earth.

- Over time, this radiation causes cumulative damage known as total ionizing dose (TID) and can also induce instantaneous failures like bit flips (single-event upsets) or burnout of circuits from heavy ion strikes.

- For a computing payload expected to operate reliably for years, radiation is a silent but serious adversary.

To underscore the stakes, consider Vladimir Putin’s recent saber-rattle: a public musing that a single nuclear detonation in orbit could wipe out every satellite in low Earth orbit.

The resulting “prompt dose”—an intense, broadband electromagnetic pulse—wouldn’t just fry spacecraft electronics; its cascading effects on ground infrastructure could be even more catastrophic. The scenario is hypothetical but technically sound, and it reminds every mission planner that radiation threats aren’t limited to cosmic weather—they can be man-made and instantaneous.

Commercial silicon is built for data center A/C, not cosmic buckshot.

Typical commercial-grade computer chips are not designed for radiation. A burst of particles can flip bits in memory or logic, causing software errors or crashes. More insidiously, prolonged exposure gradually shifts transistor parameters or even fries components.

The widely used BAE Systems RAD750 radiation-hardened processor (a space-hardened variant of an old PowerPC CPU) can survive on the order of 200,000 to 1,000,000 rads of total dose.

- By contrast, an ordinary modern server CPU would be severely damaged by a tiny fraction of that dose — potentially just a few krad.

- This is why most satellites either use rad-hardened (rad-hard) electronics, which are bespoke and purpose-built to tolerate radiation, or they shield and/or extensively error-correct the sensitive parts.

Rad-hard components come with predictable trade-offs: they lag a decade of Moore’s Law and cost six figures per board for 200 MHz performance (laughably slow by modern cloud standards).

Newer space-grade CPUs (like the forthcoming HPSC or some FPGA-based systems) will improve this, but a performance gap will always persist.

Alternatively, you could swing the other way and try to fly commercial off-the-shelf (COTS) CPUs/GPUs and wrap them in millimeters of aluminum or tantalum, add ECC everywhere, and let watchdog logic reboot anything that hiccups.

- This is the approach some experimental missions try: take a regular processor or even an AI accelerator, and add a heavy vault of shielding plus error-correcting memory and fault-tolerant software to ride through glitches.

- Shielding is somewhat effective — a few millimeters of aluminum, or better yet Tantalum, can dramatically cut certain radiation doses — but it’s heavy.

For a large data center in space, if you tried to enclose the whole thing in a thick vault, the mass would weigh you down. A nuanced design might shield only the most sensitive parts (memory chips, etc.), and rely on the fact that lower orbits (LEO) have less radiation than higher orbits (thanks to Earth’s magnetic field deflecting some cosmic rays).

While shielding helps (especially in LEO, where total dose can be held to a few to tens of krad over several years), mass climbs fast and the belts in MEO and GEO will still cook unprotected gear by the hundreds of krad.

Orbit choice, then, is a dose knob. Below ~600 km Earth’s magnetosphere does most of the blocking—except over the South Atlantic Anomaly, where every pass is a mini stress-test. Higher altitudes step outside that umbrella, and GEO birds plan for ~15-year lifetimes only by pairing thick vaults with rad-hard brains.

An orbital data center parked that high would either shorten its mission or haul up a lot of tungsten.

Radiation also affects reliability and maintenance strategy.

On Earth, servers fail from time to time due to normal issues. The solution is simple: you swap out the faulty gear.

In orbit, you likely can’t repair or replace a failed component, so the system must be highly fault-tolerant. This means redundant computers (if one glitches, another takes over), using error-correcting code memory and storage to catch bit flips, and designing software that can checkpoint and restart tasks if something goes awry.

Space systems engineers have a lot of experience with these types of solutions — for instance, using triple-modular redundancy (three processors voting on results) to detect errors.

Applying these techniques to an orbital data center will be necessary if using any modern high-density chips, and it will not be cheap.

Even after taking all of the above into account…

…cosmic ray “ghost faults” will slip through and hit at random. Even if the chip isn’t permanently damaged, a single high-energy particle could crash a computation or corrupt data.

In a node handling important workloads, this is a major concern for data integrity. Techniques like scrubbing memory, majority voting on computations, or simply running certain tasks multiple times for verification might be needed in the software stack to ensure correctness.

Lastly, radiation can degrade other materials too—panels bleach under proton rain, plastics embrittle, sensors glaze—in general, hardware ages in dog years. So the entire platform’s longevity might be limited by radiation unless extra precautions are taken.

One practical answer is planned obsolescence: design for a 3-5 year LEO tour, deorbit, and launch fresh boxes using the latest-and-greatest COTS gear. This cadence might align better with the rapid advances in computing hardware anyways.

With an agile engineering approach and regular upgrade cycle — deploying new spacecraft as old ones fail or become obsolete — you trade launch dollars for Moore’s-Law speed-ups and avoid lugging 20 years of shielding.

The downside is (obviously) the recurring launch costs and potential interruption of service during replenishment.

In sum: Any orbital-compute venture must explicitly budget for radiation.

You can pay in one of three currencies—performance (rad-hard but slow), mass (shielded COTS), or refresh cadence (launch-and-replace every few years). Layer redundancy on top, or your uptime will melt under the particle hail.

If an orbital-compute pro forma glosses over these expenses, it looks good only as presentation slide and won’t survive real-world physics.

Structural scale, deployment, and maintenance: Engineering challenges of large-scale orbital infrastructures

Building anything in space is difficult; building a large, complex structure like a data center in orbit magnifies that difficulty. A significant challenge for scaling orbital compute is simply getting enough hardware up there and assembled in a functioning configuration.

Current satellites that host computers (like a small server) are typically on the order of a 300 kg or less — basically the size of a mini-fridge or small closet. To have a data center-like capability (with substantial solar arrays, radiators, and multiple server racks), you might be looking at a platform mass of several tons at least.

- For example, an analysis of a ~100 kW orbital data center concept showed an estimated mass of 3–5 metric tons for the satellite, including:

- solar panels (~700 kg for ~100 kW generation),

- batteries (a few hundred kg),

- radiators (~1000 kg),

- structure, cooling loops, computers, and more…

- That’s actually within the launch capacity of a single heavy-lift rocket — a promising sign. A vehicle like SpaceX’s Starship could potentially loft 50+ tons to LEO, making it conceivable to launch an integrated 1 MW system (broken across a few launches perhaps).

Raw launch capability is on the horizon. But if we scale beyond one launch, we face a new challenge: we’re entering the realm of in-space construction. Any discussion of multi-megawatt “station” in orbit likely means assembling modules in space — bolting together solar-truss sections, kilometer-long radiator wings, and modular server pods—all robotically, because shuttle crews aren’t coming back.

- The ISS model (assembled piecewise over many Shuttle flights, with astronauts and robotic arms doing the construction) is extremely expensive and labor-intensive.

- We can safely rule it out for commercial space cloud/supercomputing/AI factory ventures.

- (Also, every hinge, latch, or inflatable boom is a single-point risk, and telecom history is littered with satellites that died because one panel refused to unfurl.)

Since traditional EVA crews are too expensive, the industry is looking towards automated assembly: robotic arms that mate modules, and deployable or inflatable structures that blossom once they clear the fairing.

A data center might benefit from these innovations. The dream scenario: launch a compact, school-bus-size bundle, hit the unfold button, and watch it bloom into a kilometer-long solar wing or a radiator field big enough to cool a steel mill.

The challenge: Those tricks could let an orbital cloud grow by orders of magnitude without flying a dozen heavy-lift stacks—but every hinge, motor, and bladder you add is another potential “failed-to-deploy” headline.

Microgravity removes weight but not dynamics. While orbital structures don’t need to support their weight against gravity as on Earth, they remain subject to complex dynamic forces. Rotation, thruster firings, and thermal expansion/contraction create oscillations that must be managed. For very large structures spanning kilometers, even Earth’s gravity gradient (the slight difference in gravitational pull across the structure’s length) can induce bending and vibration.

A large orbital platform requires sophisticated designs to handle flexing and oscillation. Uncontrolled vibrations in components like solar arrays can misalign critical systems including communication antennas, solar panels, and thermal radiators.

The ISS demonstrates this challenge—despite being “only” ~100 m across, it requires specialized damping systems to counteract oscillations caused by routine activities like astronauts rurnning on treadmills or spacecraft docking.

Now factor in micrometeoroids and orbital debris. Big surface area means big target. Tiny paint flecks at 10 km/s can pierce radiator tubing; a 5 mm fragment can sever a power harness. A large area (solar panels, radiators) is almost guaranteed to be hit by small debris over time.

The ISS’s radiators and solar panels have many small holes from debris strikes over the years, and a few leaks in the cooling system have been traced to suspected micrometeoroid hits.

For a data center, a stray debris strike could knock out a radiator panel or sever a critical cable. Redundancy and shielding can mitigate this. You can compartmentalize loops and add Whipple shields, but every ounce of protection adds cost and complexity.

Servicing is the final boss. Today, nearly all satellites are “fly-till-they-die.” Similarly, the lifetime of an orbital compute node might be limited by whichever comes first: radiation wear-out, exhaustion of consumables (fuel for attitude control or reboost), or hardware failures.

Robotic refuelers and wrench-bots are in prototype, but no one has field-proven rack-swap at scale. It’s conceivable that a future orbital data center could be designed for modular upgrades or repair by a robotic servicer spacecraft.

- We could see a scenario in which compute modules are plug-and-play boxes that a service drone can swap out. This a pretty futuristic design, and not something we’re likely to see in early implementations.

Early orbital clouds will default to a “operate, deorbit, replace” model: design for a 5–7 year tour, then burn it up and launch Version N+1 with the latest silicon. That refresh cadence also curbs long-lived junk, a growing regulatory must.

Next comes the deorbit question. Under the 25-year LEO rule you must carry a deorbit kit—propellant, drag-sail, or both. Large masses can survive re-entry; planners will need targeted splash-downs, not roulette. All these responsibilities add to the engineering checklist.

Bottom line: a handful of compute cubes? Easy. A football-field cloud, rivaling Earth data centers in capacity? Not so easy…

…and not feasible without breakthroughs in on-orbit assembly, autonomous servicing, and smart, damage-tolerant design. Until these technologies mature, the winning strategy is keep it simple and plan for refresh. And reliability isn’t just a nice-to-have, it’s table stakes. Once the stack is overhead, nobody can stroll in with a replacement power supply.

Communications, bandwidth, and latency to earth: squeezing terabits through a straw

Last but certainly not least, we have the issue of moving data to and from Earth. After all, a compute center is only useful if you can get data into it for processing and get results out to the end users. This is where some first-principles thinking is needed: Earth-based data centers are wired into fiber networks with staggering bandwidth: terabits per second connections within and between data centers are common for cloud providers.

How could an orbital data center connect to its users or data sources?

We have touched on latency — it’s fundamentally a function of orbit altitude. Speed of light is finite (~300 km/ms), so even at LEO distances (~500 km), you have a few milliseconds one-way. A user on Earth might experience a 20–40 ms latency to a cloud server in another city, so an extra 5–10 ms to go to LEO and back is negligible in many cases.

To GEO, as discussed, ~240 ms round-trip is a different ballgame, acceptable only for certain use cases. So, an orbital service aiming to compete on latency will stick to LEO or maybe MEO — those can be comparable to terrestrial networks (OneWeb reported ~32 ms latency in tests for its ~1,200 km satellites).

Bandwidth, however, is a tougher constraint. A satellite must use either radio frequency (RF) links or laser (optical) links to communicate.

- RF is the traditional method (what most comms satellites use), featuring well-understood technology but limited spectrum.

- A single satellite downlink in, say, the Ka band (~20-30 GHz) might achieve 1–3 Gbps per channel with current tech—maybe tens of Gbps using multiple beams and advanced modulation—but there are practical limits before you run into regulatory and power issues.

- Terabits/second via RF would likely require many, many parallel links and a lot of transmit power (which then draws more electrical power).

- And spectrum is tightly allocated, with interference concerns and regulations constraining the use of wide bandwidths.

- Optical offers much higher potential data rates — in the tens of Gbps to potentially 100+ Gbps per beam. Plus, it isn’t limited by spectrum licenses.

- Laser communication terminals have been demonstrated (e.g., NASA’s Laser Communications Relay demo achieved 600+ Mbps back in 2013, and newer ones aim for multi-Gbps).

- Today, commercial players like SpaceX are regularly using laser crosslinks between satellites for high-speed data transfer.

For an orbital data center, we could envision high-speed laser downlinks to specialized ground stations.

But the catch — because there’s always a catch — is the atmosphere. Laser beams require a clear line of sight, which can be blocked or distorted by clouds, rain, or even turbulence.

- Typically, this is handled by having multiple ground stations around the world and hopping to whichever has clear weather at any given time, or placing them in arid high-altitude locations to minimize cloud cover.

- To ensure reliable connectivity, an orbital data center would need a network of ground entry points and an intelligent scheduling system to determine when to send what data where.

Even with lasers, scaling to the massive throughputs of a terrestrial data center is non-trivial. Imagine you want to routinely downlink petabytes of data (which is 1015 bytes). Even at a lofty 100 Gbps, down-linking 1 PB takes more than a day.

- A modern Earth data-hall can cough up that much before lunch—generating petabytes of data traffic daily among users or to facilitate storage backups.

- An orbital facility, then, might naturally prioritize workloads that are more self-contained or space-originating.

- We’ve already provided examples, such as processing spaceborne sensor dat and only sending down results. This greatly reduces the bandwidth needed to Earth (versus transmitting all the raw data).

Conclusion: Only serve Earth what it needs.

A key factor: who is the user?

If the orbital node is serving orbital clients (other satellites, stations, or servicing craft), then the comms can be space-to-space (RF or laser) and high-bandwidth, while also avoiding atmospheric issues. Earth-bound communications, however, force you through the weather lottery and into terrestrial backhaul.

- For a “space cloud” business serving satellite customers, maybe only a summary needs to come back to Earth.

- But if your users are on Earth, you could potentially integrate the data center with existing satcom networks.

- Maybe you link into a LEO constellation or through other relay satellites, which then distribute data to users. But stitching an orbital DC into Starlink or OneWeb adds hops and routing jitter, while standing up your own optical mesh multiplies capex.

To compete with terrestrial fiber networks, which can carry terabits per second cheaply, a space system will struggle. Free-space optical links have great bandwidth but require dealing with clouds or using airships/aircraft as relays above clouds. RF links are reliable through weather but far lower capacity. One might end up needing dozens of ground station downlinks spread around the world to collectively handle the data throughput of a single sizable orbital data node. This is doable—AWS and Azure, for example, are building global networks of ground antennas for satellite communication. But it adds operational cost and latency, since after landing at a ground station, the data might still need to travel via terrestrial network to the end user.

Dan Goldin

In essence, the comms pipeline is often the bottleneck that erases the advantages of space computing. This is true for many applications. If you can’t move data in our out quicky enough, even “unlimited” solar-powered processing won’t help you.

Therefore, we think orbital computing will converge on applications with high compute-to-data ratios — tasks that require minimal I/O (input/output) but intensive processing in between. This is ideal because you ship minimal bits over the link.

- Many scientific computations or AI model training runs have these characteristics (e.g., significant number crunching for some model weights or an insight at the end).

- Conversely, tasks like cloud gaming or interactive user-facing apps require constant back-and-forth and may not be efficient to host in space until communication technology improves dramatically.

Integration Headache Mitigation Playbook

- Compress or summarize at altitude. Ship answers, not raw data.

- Adaptive routing. Fire to whichever ground site has clear skies right now.

- Cache on-orbit. Burst traffic when conditions are pristine.

- Future bets. Multi-terabit laser crosslinks, airborne or GEO relays, maybe even in-vacuo fiber someday.

We see promising, active development in multi-terabit laser crosslinks and ground receivers (e.g., Europe’s EDRS system relays data from LEO to GEO via laser, then to ground via RF, bypassing local weather issues). The future might involve an internet-in-space backbone where data centers in orbit talk to relay satellites, which then connect to Earth’s fiber at a few key junctures.

All told, your orbital cloud is not a lone bird (or flock) but a node in a sprawling, weather-sensitive, capex-heavy telecom lattice. The cost and complexity of the network must be “priced in.”

- If your use case is, say, “provide cloud computing to remote oil rigs via satellite,” you’d integrate with existing satellite links to those rigs.

- If it’s “process Earth observation data,” you’d probably have the data center satellite itself downlink to polar ground stations already receiving imagery from satellites.

Each scenario will have a different optimal comms architecture. But none will enjoy the sheer ease and bandwidth of plugging into a fiber cable under a data center parking lot.

This is a brave new world for network architects. The diagram now involves moving orbital nodes, ground stations, and maybe lasers pointing through the sky.

Pulling the threads together

Space-based computing ventures sit at the nexus of orbital mechanics, aerospace engineering, and system design.

Orbit, power, heat, radiation, structure, debris, comms—each one of these is a non-trivial problem. Each is a boss you must fight on its own, and every victory makes some other monster stronger. Climb higher and you slay drag but resurrect latency and dose. Add shielding and you save the CPUs but sink the mass budget. Stretch the radiator wings and chips run cooler, yet your debris strike odds spike.

To succeed, you must solve all of these constraints at once. This perpetual trade-space is the reason we run Earth-bound clouds.

But the physics don’t say “impossible.” They say: pick your compromises carefully, pay every bill you can’t dodge, and iterate up the power curve, one hard-won kilowatt at a time.

Section 004

From kilowatt demos to megawatt dreams — what works this decades, what waits for the 2040s, and where the money hides in between.

Given the formidable challenges, at this point, you’d be right to ask…

…is space-based computing actually viable, and on what timeline?

The answer likely differs for the short term (next few years), medium term (next 10-15 years), and long term (beyond 15 years).

It’s important for leaders and investors to calibrate expectations accordingly — we need to be visionary yet grounded about what can happen when.

Act I · Crawl (Mid-2020s to early 2030s)

The first chapter is already being written in cramped cleanrooms and ISS lockers. Think suit-case payloads: a handful of COTS CPUs or a single FPGA board riding shotgun on an orbital imager or bolted to an ISS experiment pallet. Governments and startups are already developing and flying these small-scale systems in LEO, aiming to validate basic functionality:

- Can we keep COTS processors running reliably for a year in orbit?

- Can we downlink data and manage thermal cycles effectively?

These are high-value, low-bandwidth applications with equally surgical customers: a security user who wants to run missile-warning inference next to their sensor; a weather outfit that only needs to beam down fire alerts; a fintech institution willing to pay a premium for cryptographic keys stored forever out of reach.

Commercially, an enterprising company might offer “orbital edge computing” as a service for its fellow satellite operators: send your raw imagery to our compute sat(s), we’ll run your analytics and downlink the results.

The scale here will be on the order of kilowatts of power and perhaps a few teraflops (TOPS) of computing—effectively equivalent to a rack or two of servers on Earth, not the whole dang data center.

Remember: This is the “crawl” stage to work out kinks in radiation handling, thermal control, and autonomous operation. Launch costs, while dropping, are still significant. Anything going up must justify itself with a unique value proposition.

We do not expect broad adoption by mainstream cloud providers in this timeframe. It will instead be pioneers teasing out opportunities in specific verticals. Their early bets will shape best practices and technical standards (for power, cooling, networking in space), and build confidence if successful. They will also help shake out overly optimistic ideas, serving as reality checks on what’s truly achievable.

Anyone waving a business plan for a hundred-megawatt LEO cloud by 2028 is still pushing science fiction.

We’ve just painstakingly provided you with an honest accounting of the challenges that exist. The physics and unit economics won’t be conquered overnight. But investors, strategics, and savvy space agencies would be wise to think about the enabling technologies (advanced thermal systems, radiation-tolerant high-performance chips, or optical comms) in this phase that could pay dividends later.

Act II · Walk (Mid-2030s)

If pathfinders don’t fall on their faces, the next decade opens with constellations, not cubes. In 5-15 years, we might witness a fleet of computing satellites forming a rudimentary “cloud in space.” We could see multiple networks of dozens of LEO birds with 10 kW power and robust inter-satellite links, and these operational compute clusters consistently serving niche markets. Launch costs are projected to continue dropping. If Starship and/or reusable rockets from others succeed, we could see <$500/kg to LEO pricing, allowing operators to send up much larger payloads for far less.

At this moment — the (theoretical) moment of many clouds deployed in space — we shift from proof-of-concept to net-new capability. Data centers in orbit might still be small by Earth standards — perhaps equivalent to a single modest terrestrial data center in total capacity — but it would be uniquely compelling, in offering global reach and direct integration with space assets.

During this “Walk” phase, we might also expect government or commercial mega-projects to emerge if justified. Here, we move the goalposts again. For instance, a government might develop and fly a 100 kW compute bus in a 1,000-km sun synchronous orbit that never blinks and feeds live intel to commanders.

Such a platform might involve on-orbit assembly of multiple modules (testing out those capabilities). The cost would be high, but the payoff would be a strategic capability that’s impossible or uneconomic to otherwise achieve.

On the commercial side, if space mining or lunar development takes off in the 2030s, there could be a need for heavy computing support off Earth, which might be easier to supply from orbit than from ground stations given the distances.

We see a long list of enabling technologies that could support us in the “Walk” phase:

- Radiation-tolerant compute: Error-tolerant architectures, on-chip ECC everywhere, and AI-driven fault-recovery routines push COTS silicon toward multi-year uptime.

- Lightweight power systems: Ultra-thin solar blankets, lithium–sulfur / solid-state batteries, and early demos of satellite-to-satellite wireless power transfer shave kilograms per kilowatt.

- Next-gen thermal control: High-capacity loop heat pipes, variable-emittance radiator skins, and compact two-phase pumps give every extra watt a place to go.

- Autonomous assembly & servicing: Free-flying robot arms with magnetic end-effectors, snap-fit compute modules, and propellant-topping “tow trucks” turn one-and-done satellites into serviceable assets.

- Laser networking at scale: Multi-Gbps optical cross-links, adaptive cloud-hopping ground meshes, and inter-sat relays knit separate nodes into a single low-latency sky cloud.

Technically, this era is defined by derisking. This “Walk” phase is all about maturing the core Lego bricks that a larger orbital cloud would need.

Economically, this is also the first test of price parity: can a space CPU/GPU beat Earth on $-per-useful-inference? It might, if the workload is power-hungry on the ground and latency-tolerant across the sky.

If costs drop enough, perhaps some compute-intensive workloads will migrate skyward for the efficiencies or performance gains. This might include certain AI training jobs, cryptocurrency mining, and anything else mainly limited by power and cooling here on Earth.

We may see Fortune 500 early adopters and household names from the technology industry begin offloading some cloud services to orbit, especially through partnerships with space firms.

This is not a given. Earth-based infrastructure will also improve, and terrestrial power might get greener and more abundant if solar farms and/or fusion progress. But space will always have the appeal of no local emissions, fewer NIMBY issues, theoretically very high scalable power per unit area, and plentiful sunlight if you can harness it in huge quantities.

Act III · Run (2040s and beyond)

In the long run, if humanity continues on its current tech trajectory, space-based computing could become an extension of our planetary infrastructure. It will likely never replace Earth-bound hyperscalers wholesale, but could absorb the workloads our planet handles poorly and co-locate compute with off-world industrial/scientific endeavors.

If launch costs keep falling and in-space robotics mature, by the 2040s—or maybe the 2050s, if history slips a decade—orbital compute could evolve from experimental, exotic demos to a mainstay. Two potential energy scenarios might enable this transformation:

- Gigawatt solar platforms. Several space-based-solar proposals envision kilometer-scale arrays beaming energy home by microwave. Let’s assume a few are on orbit and that a single commercial power sat (beaming microwaves to Earth) has head-room to divert 2% of its output—tens of megawatts—to co-located racks before the beam ever leaves orbit. That makes an orbital “server annex” essentially a free-rider on the power bus.

- Compact fusion. If affordable, truck-sized fusion plants arrive — a concept that the Department of Energy and half of Silicon Valley are now funding. This allows us to skip the sun-angle gymnastics altogether. A 50-MW fusion pod parked in GEO runs 24/7, feeds compute directly, and dumps waste heat to radiators designed from asteroid aluminum rather than $10K/kg launch metal.

If Earth’s population and data demands keep rising, orbital computing could become a much-needed safety valve — an off-planet extension of the Internet that relieves terrestrial infrastructure. If that valve is proven to help us alleviate constraints on local land use, grid capacity, and cooling resources, we might see a tiered system emerge. Latency-critical tasks run on Earth or in LEO, while massive batch jobs run on orbital farms that beam results down as needed.

Cloud providers of 2050 might routinely decide whether a given workload runs in Arizona or Orbit. This is still highly speculative, of course, and it depends on many stars aligning: ultra-low launch cost, reliable space operations, and sufficient demand, to name a few.

Environmental considerations will only grow in importance. While orbital computing could help us shift energy-intensive tasks off-planet, it brings its own sustainability challenges. By the 2040s, robust space traffic management and debris removal systems will be essential to prevent Kessler Syndrome (a catastrophic debris cascade that could render certain orbits unusable for generations). Sustainable orbital infrastructure will require strict norms for responsible operations, including mandatory deorbit protocols and active debris cleanup.

Zoom Out—Gradual, Not Sudden