Ryan here, typing from a table at Two Hands on South Congress Ave in Austin, Texas…where every few minutes, two futures roll by me.

First comes a white Jaguar I-PACE wearing Waymo’s spinning lidar tiara, a familiar sight around here by now (and in Phoenix, SF, and LA). Moments later, the new kid on the block glides by: a Model Y, virtually indistinguishable from the 200,000+ that Tesla sold last quarter, save for the cyberpunky “Robotaxi” badge on its front doors. At long last, the future is really here. It’s just not evenly distributed.

Same street, same heat, two very different bets on how a machine should navigate the world. Since last Sunday, Austin has emerged as the first live A/B test of autonomy at commercial (albeit limited) scale. Whether the Waymo and Tesla robotaxis are passing by you in the flesh or igniting knock-down, drag-out dumpster fires in social media comment sections, the split between them is hard to miss.

The Determinist, and the Probabilist

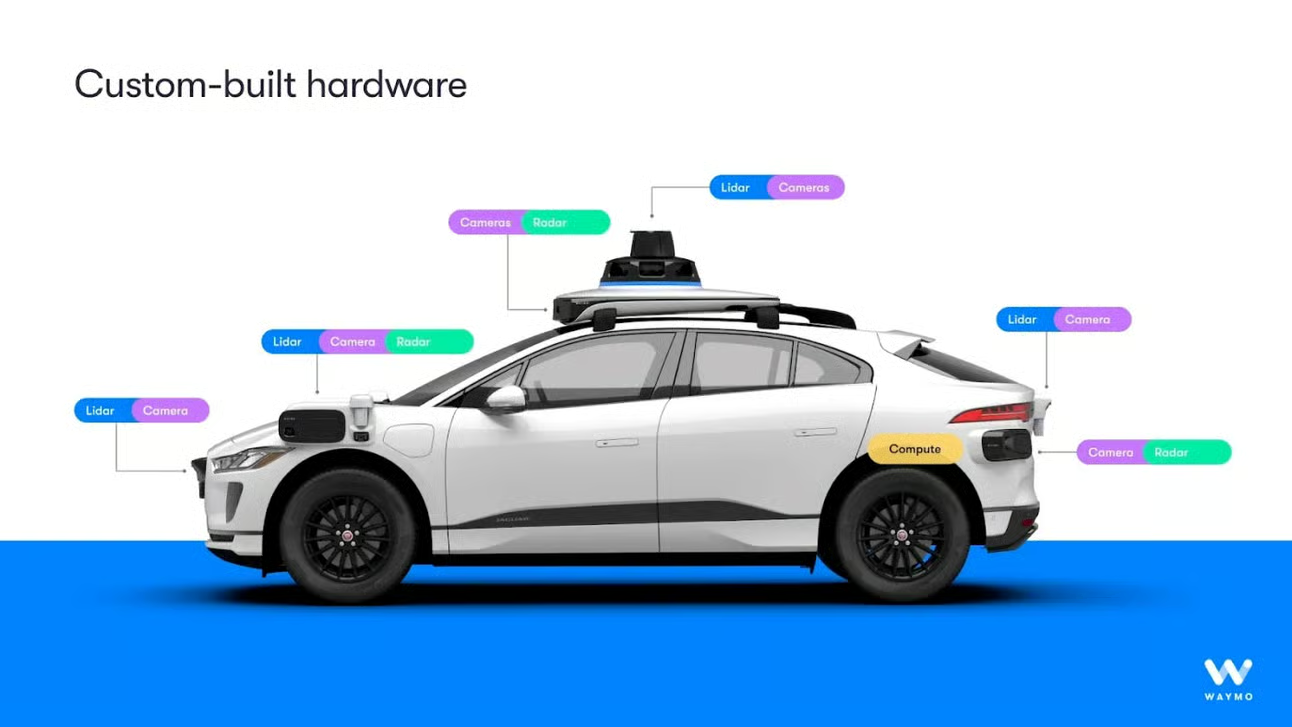

The two robotaxi providers are often cast as opposites, with Waymo’s map-anchored, sensor-rich hunt for certainty squaring off against Tesla’s fleet-trained, camera-only bet on learned intuition.

In truth, this is an oversimplification. Both camps blend strategies. Waymo trains monster neural nets for perception and planning, while Tesla maintains a rulebook for certain safety cases (like yielding to firetrucks). But their core strategies feel worlds apart.

Waymo’s critics say it’s crafting the self-driving equivalent of a Swiss chronometer: exquisitely precise, over-instrumented, and uneconomic at scale. Tesla’s bet (strip the hardware, scale the neural net) has its own detractors, who claim it will plateau at “good enough most of the time” and fall short of the redundancy and reliability required for Level 5 autonomy. In sum…costly precision that (supposedly) will never scale vs. commodity hardware and scalable inference that (allegedly) may never conquer the last 1%.

Why should the rest of us care?

Because Waymos and Teslas going head-to-head at Terry Black’s Barbecue isn’t just a quirky Austin curiosity. They represent a choice that every complex, safety-critical system faces: balancing deterministic rules with probabilistic adaptability. Look at the world through this lens, and you’ll see examples everywhere:

- Power & grids: Protective relays must trip within milliseconds (deterministic), while AI forecasters reshuffle storage and solar every five minutes (probabilistic).

- Commercial airliners: Triple-redundant flight-control computers enforce hard limits on pitch, roll, and angle of attack to prevent stalls, while adaptive algorithms optimize fuel efficiency on the fly.

- Semiconductor fabs: Nanometer-exact lithography steppers print patterns onto wafers, then ML vision hunts for defects that no human inspector could catalog.

- Hospitals: Infusion pumps hard-lock dosage limits, but diagnostic models sift patient data to flag conditions doctors didn’t expect.

If that feels too abstract, consider your daily life: Your phone’s Face ID runs rigid security checks before guessing it’s you. Your car’s ABS follows fixed logic, while adaptive cruise control predicts what the cars around you will do. Social media algos strictly filter spam before probabilistically recommending the next post you see. Even your lowly thermostat contains multitudes: a safety switch that kills the furnace if it overheats, alongside algorithms that adapt to your schedule and right-size your summer A/C bill. LLMs are the poster children for the probabilist approach, dynamically generating responses from trillions of possibilities…yet even these engines have deterministic guardrails.

You’ll start noticing this everywhere you look.
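To make the thermostat example a bit more concrete, here’s a minimal Python sketch of a deterministic rule wrapped around a probabilistic estimate. Everything in it is hypothetical: the 90 °C cutoff, the `AdaptiveScheduler` and `furnace_command` names, and the simple frequency-based occupancy estimate are illustrative assumptions, not a description of any real product. The point is the ordering: the hard safety rule is checked first, and the learned estimate only gets a vote once that rule is satisfied.

```python
from dataclasses import dataclass, field

# Hypothetical hard limit for this sketch; real appliances use their own certified values.
MAX_FURNACE_TEMP_C = 90.0


@dataclass
class AdaptiveScheduler:
    """Probabilistic layer: estimates, per hour of day, how likely someone is home."""

    home_counts: list[int] = field(default_factory=lambda: [0] * 24)
    total_counts: list[int] = field(default_factory=lambda: [0] * 24)

    def observe(self, hour: int, occupied: bool) -> None:
        """Record one occupancy observation for the given hour (0-23)."""
        self.total_counts[hour] += 1
        if occupied:
            self.home_counts[hour] += 1

    def p_home(self, hour: int) -> float:
        """Laplace-smoothed probability of occupancy; with no data it returns 0.5."""
        return (self.home_counts[hour] + 1) / (self.total_counts[hour] + 2)

    def setpoint(self, hour: int, comfort_c: float = 21.0, eco_c: float = 16.0) -> float:
        """Blend eco and comfort targets in proportion to the occupancy estimate."""
        return eco_c + self.p_home(hour) * (comfort_c - eco_c)


def furnace_command(scheduler: AdaptiveScheduler, hour: int,
                    room_temp_c: float, furnace_temp_c: float) -> bool:
    """Return True to run the furnace, False to keep it off."""
    # Deterministic layer, checked first: no learned estimate can override it.
    if furnace_temp_c >= MAX_FURNACE_TEMP_C:
        return False
    # Probabilistic layer: heat only while the room is below the learned setpoint.
    return room_temp_c < scheduler.setpoint(hour)


if __name__ == "__main__":
    sched = AdaptiveScheduler()
    for occupied in [True] * 8 + [False] * 2:  # ten observations at 7 a.m.
        sched.observe(7, occupied)
    print(round(sched.setpoint(7), 2))            # 19.75
    print(furnace_command(sched, 7, 18.0, 50.0))  # True
    print(furnace_command(sched, 7, 18.0, 95.0))  # False: safety cutoff wins
```

Feed it a few weeks of observations and the setpoint drifts toward your actual routine; no amount of learning, though, can talk the furnace past the cutoff.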

Last week, I was in Philadelphia to speak at a conference about our work at Array Labs. Later, another speaker, Vijay Kumar, dean of Penn Engineering, took the stage and laid out three distinct eras of robotics:

- Robotics 1.0 – scripted – you program the robot’s every move.

- Robotics 2.0 (now) – adaptive – probability-driven neural nets learn as they go.

- Robotics 3.0 (next) – semantic – tell the robot what you want done in plain language and it figures out the rest. Andrej Karpathy and others have described this as the move to “Software 3.0,” where prompts displace code as the primary way to direct computational (and robotic) behavior.

Each generation builds on the previous one, creating convergent stacks: determinism as a rigid skeleton beneath more probabilistic muscle. Society’s acceptance of autonomy relies on this balance. Lawmakers, insurers, and consumers want certainty, while engineers need latitude, because our world refuses to stay static or boxed in.

Which brings us to the anticlimactic conclusion our brains never really wanted…

The wetware processor (AKA, your brain) is wired to crave a Western showdown: laser cowboys vs. vision gunslingers, with one riding off into the sunset and the other left in the dust. Sorry, partner, but systems engineering rarely serves up such clean drama: this doesn’t end in a duel but in an evolutionary handshake.

The rise of the probabilist marks a real shift, not just in how we build, but in how we trust, instruct, and coexist with machines that don’t always follow a script. These systems already earn their keep, spotting storm cells before we see clouds and flagging illness before symptoms surface, yet our confidence hinges on their disciplined willingness, in critical moments, to obey rules we’ve carved in stone (or hand back control entirely). We ask them to amplify our instincts, but never outrun our intentions. So the most consequential systems (cars, reactors, agents, humanoids) aren’t built for either-or purity, but as careful compromises.

Food for thought: As these systems evolve from tools into true partners and proxies, how much agency do we retain, and how much do we confidently delegate? Ultimately, assuming sustained progress, the true frontier may lie not in defining what machines can do, but in deciding where their judgment ends and ours begins.

Off-Cuff

To bring it back to Two Hands…it’d be silly (and premature) to crown a robotaxi champion. But we can say this much: the eventual winner will be whichever outfit delivers a product that is both affordable and unflappable. The spoils of the holy grail, Level 5 autonomy, will be measured in the trillions. And it may not be winner-takes-all: two (or more) players could cross the line at scale.

So, what do you think? We want to hear from the collective Per Aspera hive mind, so we’re running an audience poll: a quick, early read on where the smart money (AKA, y’all) thinks the momentum is.